AI Decisioning is a new class of marketing technology that delivers 1:1 personalization. Instead of relying on journeys or static segments, it uses AI agents to continuously learn which experiences drive the best outcomes for each customer. In this four-part series, we’ll explain some of the core technologies: reinforcement learning, multi-armed bandits, and contextual bandits. By the end, you’ll understand how these technologies fit together to create 1:1 personalization that works at scale.

The marketer’s dilemma: Stick with winners or test everything?

Imagine you’re launching a new email campaign with five potential subject lines. A traditional A/B testing approach would pit them against each other in pairs, analyze the results, and eventually crown a winner. The problem? That process could take weeks, and by the time you’ve found the best option, the campaign’s peak moment has likely already passed.

Now add some real-world complexity. You’re probably not just testing five subject lines—you also want to optimize:

- 5 subject lines

- 4 send times

- 3 offer types

- 2 creative templates

That’s 120 possible combinations. Even running tests in parallel, it could take months to find the best combination. Most marketers make the practical choice to test one or two variables at a time, hoping the rest falls into place.

But every underperforming email sent during a slow testing process is a lost opportunity for engagement. Over time, those missed chances add up to a poor customer experience that risks damage to your brand.

We don't need to choose between slow, comprehensive testing and fast decisions with limited data. Multi-armed bandits offer a smarter alternative.

Multi-armed bandits are a type of reinforcement learning used for experimentation and optimization that balances using proven strategies (exploitation) with testing new approaches (exploration). In our last post, we looked at how reinforcement learning enables AI agents to discover what works for individual customers through continuous experimentation at scale. Multi-armed bandits take this further—they don’t just learn what works, they intelligently decide when to use proven winners and when to test something new.

The classic multi-armed bandit problem

The name “multi-armed bandit” comes from a classic problem in machine learning. You walk into a casino and face a row of slot machines (“one-armed bandits”). Each machine can pay out differently, but you don’t know which pays best. With your limited money, how do you determine which machines to play and how often to maximize your winnings?

Image from wikimedia.org

This problem mirrors the challenge of marketing optimization. Each “arm” represents a choice about your marketing messages and their delivery:

- Subject lines: “Buy now!” vs. “Just for you”

- Send times: 9 am vs 3 pm vs 8 pm

- Creative templates: Product grid vs. video thumbnail

As with slot machines, you don’t know what will work best, so you gather data by pulling different arms and testing. Each test of a poor performer has financial and opportunity costs, which, in marketing, means lost engagement, lower conversions, and wasted opportunities.

Multi-armed bandits give you a systematic way to learn while still using what you know works. You explore and exploit at the same time, shifting resources to winning actions while still exploring new possibilities. This systematic approach to learning and optimization is a core component of AI Decisioning, enabling intelligent, data-driven choices for each customer.

How multi-armed bandits work in marketing

Multi-armed bandits solve this exploration-exploitation challenge by continuously adjusting allocation based on performance data. Rather than using set splits (50/50 or more complex splits in journeys), they shift send volumes dynamically as results come in, making thousands of small adjustments.

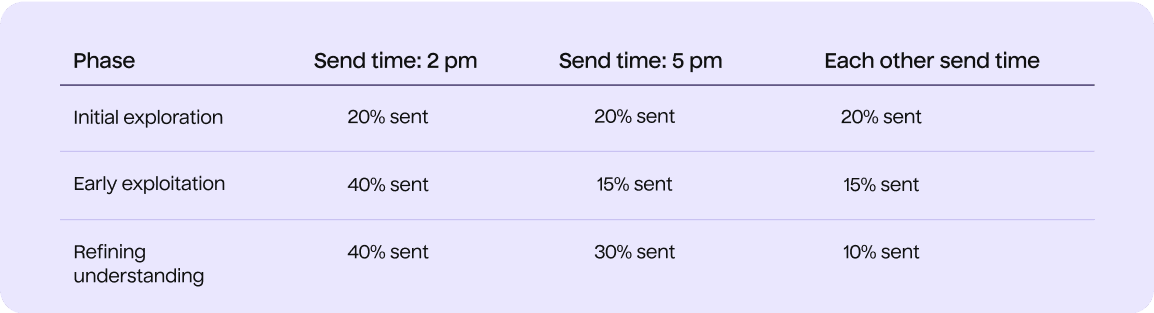

Here’s how that continuous learning process unfolds, using send time optimization as an example:

Phase one: Initial exploration

- Test five send times equally: 8 am, 11 am, 2 pm, 5 pm, 8 pm

- Each time is used for 20% of our audience

- We’re tracking opens, clicks, and conversions

As data accumulates: Early exploitation

- 2 pm shows 30% higher engagement than other times

- The bandit shifts send volume so that 40% of sends use 2 pm, with 15% to each other time

- The bandit continues to test while exploiting the early winner

With growing confidence: Refining understanding

- 5 pm becomes stronger (likely due to after-work browsers), while 8 am always performs poorly

- The bandit shifts send time allocation: 40% at 2 pm, 30% at 5 pm, and 10% for the other send times

Ongoing: Continuous optimization

- The bandit focuses on exploitation (80%+ to winning times)

- The bandit continues to explore other times

- With this exploration, the bandit can quickly adapt if customer behaviors change

Multi-armed bandits handle this intelligent allocation across options automatically and continuously, while managing thousands of variations.

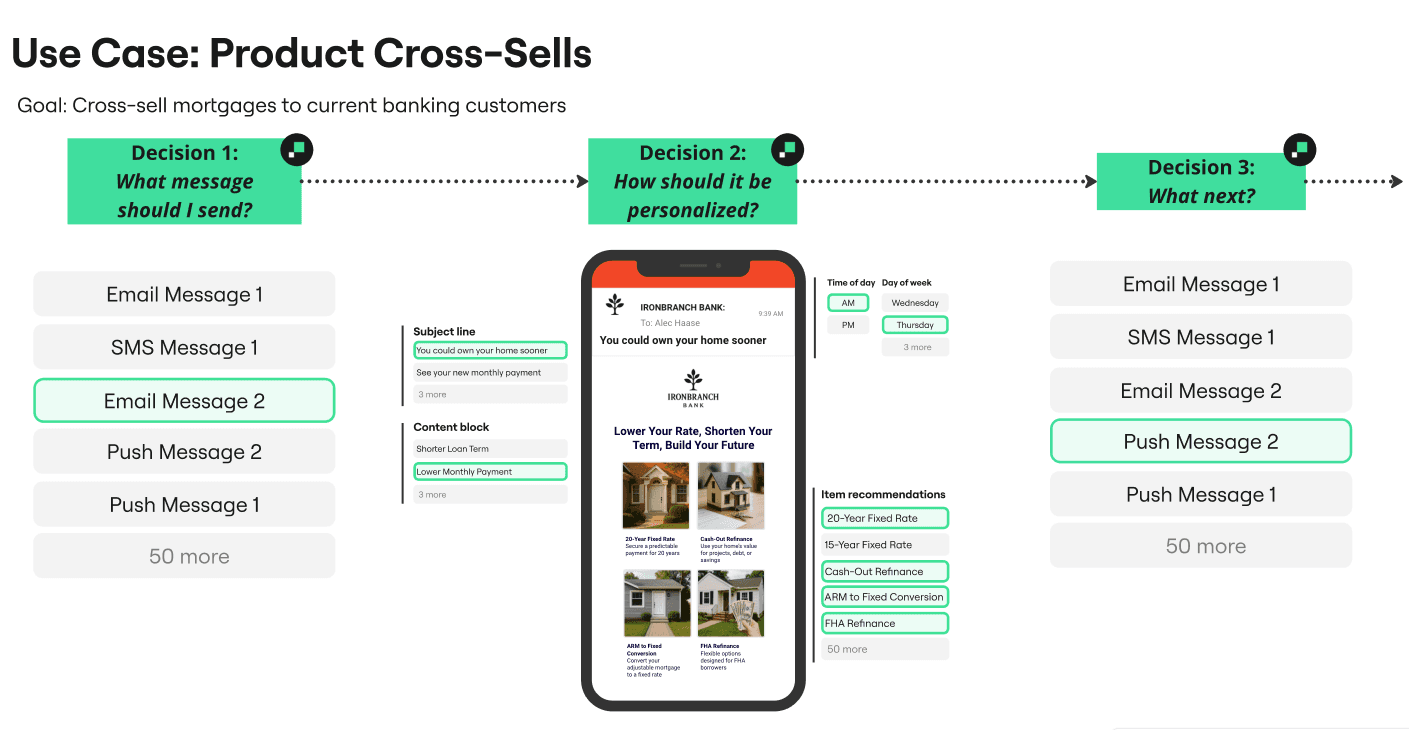

This send time example demonstrates multi-armed bandits in action, but it’s just the beginning. The breakthrough happens when you apply this approach to every marketing decision simultaneously: send times, subject lines, pre-headers, images, offers, channels, and more.

Then, you aren’t just optimizing one variable; you’re intelligently exploring thousands of combinations while dynamically optimizing for what works best.

How to balance exploration and exploitation

There’s more than one way to balance testing and performance. What we looked at above reduces the exploration over time, but different algorithms are available to adjust the balance.

They follow three general approaches:

Fixed exploration rate: The simplest approaches, like Epsilon-greedy, reserve a set percentage (such as 10%) for testing new options while mostly sending to current winners (90%).

Confidence-based: More complex approaches, like Thompson Sampling, explore more when the bandit is uncertain about results and exploit more as it builds confidence in what works. If a new subject line shows promise, the bandit tests it more. As performance patterns become clear, testing decreases.

Adaptive exploration: Other advanced algorithms, like SquareCB, adjust exploration rates based on the problem complexity and the data collected. They explore aggressively early on when learning quickly is important, and shift toward exploitation gradually as response patterns become clear.

Each of these approaches has strengths, and they enable companies using multi-armed bandits to find the best approach for their particular business case.

Multi-armed bandits in marketing

Multi-armed bandits let us rethink how we approach standard marketing challenges. They provide a way to test and optimize our marketing toward different outcomes in situations where we need faster and higher-volume experimentation.

Here are a few common uses in marketing:

- Email campaign optimization: Beyond subject lines, we can test any components within an email campaign, such as CTAs, visual elements, and layouts (like image vs. text-heavy layouts).

- Send time optimization: Optimize email, SMS, and push messages rather than relying on batch or segment-based sends, and adapt automatically to changes in the customer base or seasonality.

- Channel orchestration: Optimize your channel mix by message type and theme, testing whether promotional offers work better via email, educational content performs best through push notifications, or time-sensitive messages convert more through SMS.

- Offer strategy: Test offer amounts, types (percentage vs. dollar vs. BOGO), and related messaging, singly and in combination.

- Creative and content: Iterate on content as you determine what works, across images, social proof vs. product collections, value props, and message length.

While we’re calling out individual ways that a multi-armed bandit can optimize marketing, they become incredibly powerful when optimizing combinations of dimensions like content theme, offer type, send time, send frequency, channel preference, and more. Their impact only increases when deployed at the individual level and combined with individual customer data to achieve real 1:1 personalization.

These applications demonstrate why multi-armed bandits are essential to AI Decisioning; they provide the automated experimentation engine that makes 1:1 personalization scalable.

From better testing to true personalization

Multi-armed bandits provide a different approach to testing and optimizing marketing campaigns than the traditional A/B or segment-based tests. They find winning strategies faster, adapt to change automatically, and can scale beyond human capacities.

They’re still limited, though: they find the best option on average for all customers. What if that doesn’t work for Sarah, who only makes purchases on weekday evenings, or Marcus, who responds to new product announcements but ignores discounts?

In our next post, we’ll explore contextual bandits, the reinforcement learning approach that combines the intelligent exploration-exploitation strategies of multi-armed bandits with individual customer data. With contextual bandits, AI Decisioning can achieve true 1:1 personalization and make decisions based on what works for each customer.

If you're interested in AI Decisioning, unlocking more value from your existing marketing channels, and increasing the velocity at which you can experiment and learn, book some time with our team today!