AI Decisioning is a new class of marketing technology that delivers 1:1 personalization. Instead of relying on journeys or static segments, it uses AI agents to continuously learn which experiences drive the best outcomes for each customer. In this four-part series, we’ll explain some of the core technologies: reinforcement learning, multi-armed bandits, and contextual bandits. By the end, you’ll understand how these technologies fit together to create 1:1 personalization that works at scale.

Personalization has always promised more than it delivered. Marketers talk about "1:1 experiences," but the reality is usually segments and journeys—rough approximations of individual behavior that group people together by design.

In this series, we've been unpacking the foundational technologies that finally change that. We started by identifying the personalization gap, the disconnect between what customers expect and what current tools can deliver. Then we explored the AI technologies that solve it: Reinforcement learning showed us how AI agents can learn from experience through systematic experimentation. Multi-armed bandits taught us how to balance scaling successful tactics with testing new strategies. But standard multi-armed bandits have a fundamental blind spot: they optimize for the average customer.

Your customers aren’t average, though. Sarah, a high lifetime-value (LTV) customer, ignores every discount email you send. Marcus, a price-conscious shopper, clicks them all. Your "winning" campaign strategy works for Marcus while alienating Sarah.

This is where contextual bandits come in as the core technology in AI Decisioning. Contextual bandits incorporate individual customer data—their context—into every decision. This context includes all the rich customer data you're already collecting: purchase history, browsing behavior, engagement patterns, demographics, lifecycle stage, and preferences. Instead of asking "what works best for everyone?" contextual bandits ask "what works best for this person, right now?"

Contextual bandits are the technology that transforms 1:1 personalization from a promise to a reality.

How contextual bandits work

Contextual bandits add the critical layer of individual customer data to multi-armed bandits. Here’s how they use context to make personalized decisions.
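To make the difference concrete, here is a minimal sketch that contrasts "what works best for everyone?" with "what works best for this person?". The interaction log, the customer types, and the action names are all invented for illustration; a real system would learn from far richer context than a single label.

```python
from collections import defaultdict

# Hypothetical interaction log: (customer_type, action, clicked).
# The counts are invented for illustration, not real campaign data.
LOG = (
    [("price_conscious", "discount", 1)] * 8
    + [("price_conscious", "discount", 0)] * 2
    + [("price_conscious", "exclusive_preview", 1)] * 2
    + [("price_conscious", "exclusive_preview", 0)] * 8
    + [("high_ltv", "discount", 1)] * 1
    + [("high_ltv", "discount", 0)] * 4
    + [("high_ltv", "exclusive_preview", 1)] * 3
    + [("high_ltv", "exclusive_preview", 0)] * 2
)


def click_rates(log, key):
    """Average click rate for each group produced by key(row)."""
    totals = defaultdict(lambda: [0, 0])  # group -> [clicks, sends]
    for row in log:
        group = key(row)
        totals[group][0] += row[2]
        totals[group][1] += 1
    return {group: clicks / sends for group, (clicks, sends) in totals.items()}


def best_action_overall(log):
    """Context-free view: one winning action for the average customer."""
    rates = click_rates(log, key=lambda row: row[1])
    return max(rates, key=rates.get)


def best_action_for(log, customer_type):
    """Contextual view: the winning action given this customer's context."""
    rows = [row for row in log if row[0] == customer_type]
    rates = click_rates(rows, key=lambda row: row[1])
    return max(rates, key=rates.get)
```

In this toy log, the discount email wins on average, yet the exclusive preview wins for the high-LTV group. That is the blind spot a context-free bandit cannot see.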

From data warehouse to customer context

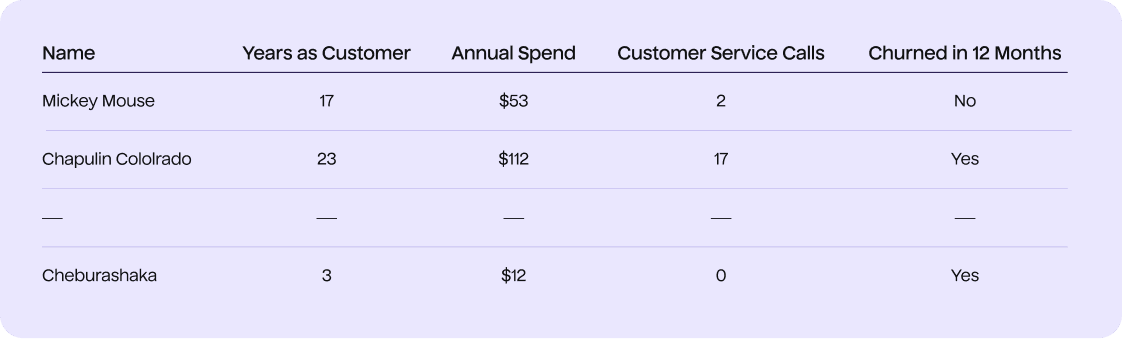

Your customer data warehouse already contains the information that contextual bandits need to make individual decisions. During the bandit decision-making process, this data is structured into a customer feature matrix where every row represents a customer, and each column represents a different attribute or behavioral property.

From this matrix, the system learns which features best predict outcomes for each individual.
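As a rough sketch of what a customer feature matrix can look like, the snippet below turns two warehouse-style records into numeric rows. The field names, the values, and the one-hot encoding of email engagement are assumptions made for this example, not a description of any particular schema.

```python
# Hypothetical warehouse rows; field names and values are illustrative only.
CUSTOMERS = [
    {"customer_id": "c1", "days_since_last_purchase": 45,
     "lifetime_value": 1250.0, "email_engagement": "high"},
    {"customer_id": "c2", "days_since_last_purchase": 3,
     "lifetime_value": 180.0, "email_engagement": "low"},
]

ENGAGEMENT_LEVELS = ["low", "medium", "high"]

# Column labels for the matrix: two numeric columns, then one column
# per engagement level (one-hot encoding).
FEATURE_NAMES = ["days_since_last_purchase", "lifetime_value"] + [
    f"email_engagement={level}" for level in ENGAGEMENT_LEVELS
]


def to_feature_row(customer):
    """Convert one customer record into a list of floats."""
    numeric = [
        float(customer["days_since_last_purchase"]),
        float(customer["lifetime_value"]),
    ]
    one_hot = [
        1.0 if customer["email_engagement"] == level else 0.0
        for level in ENGAGEMENT_LEVELS
    ]
    return numeric + one_hot


def build_feature_matrix(customers):
    """One row per customer, one column per entry in FEATURE_NAMES."""
    return [to_feature_row(customer) for customer in customers]
```

Calling `build_feature_matrix(CUSTOMERS)` yields one row per customer, with columns in the order given by `FEATURE_NAMES`.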

The prediction engine: From customer data to personalized decisions

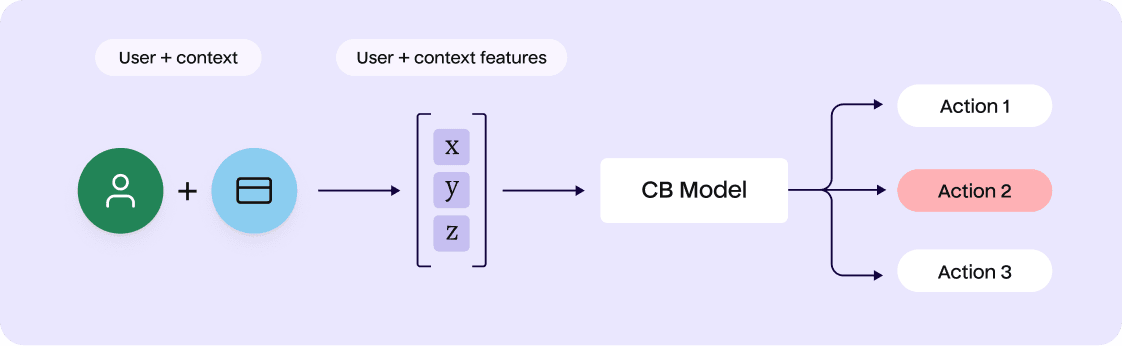

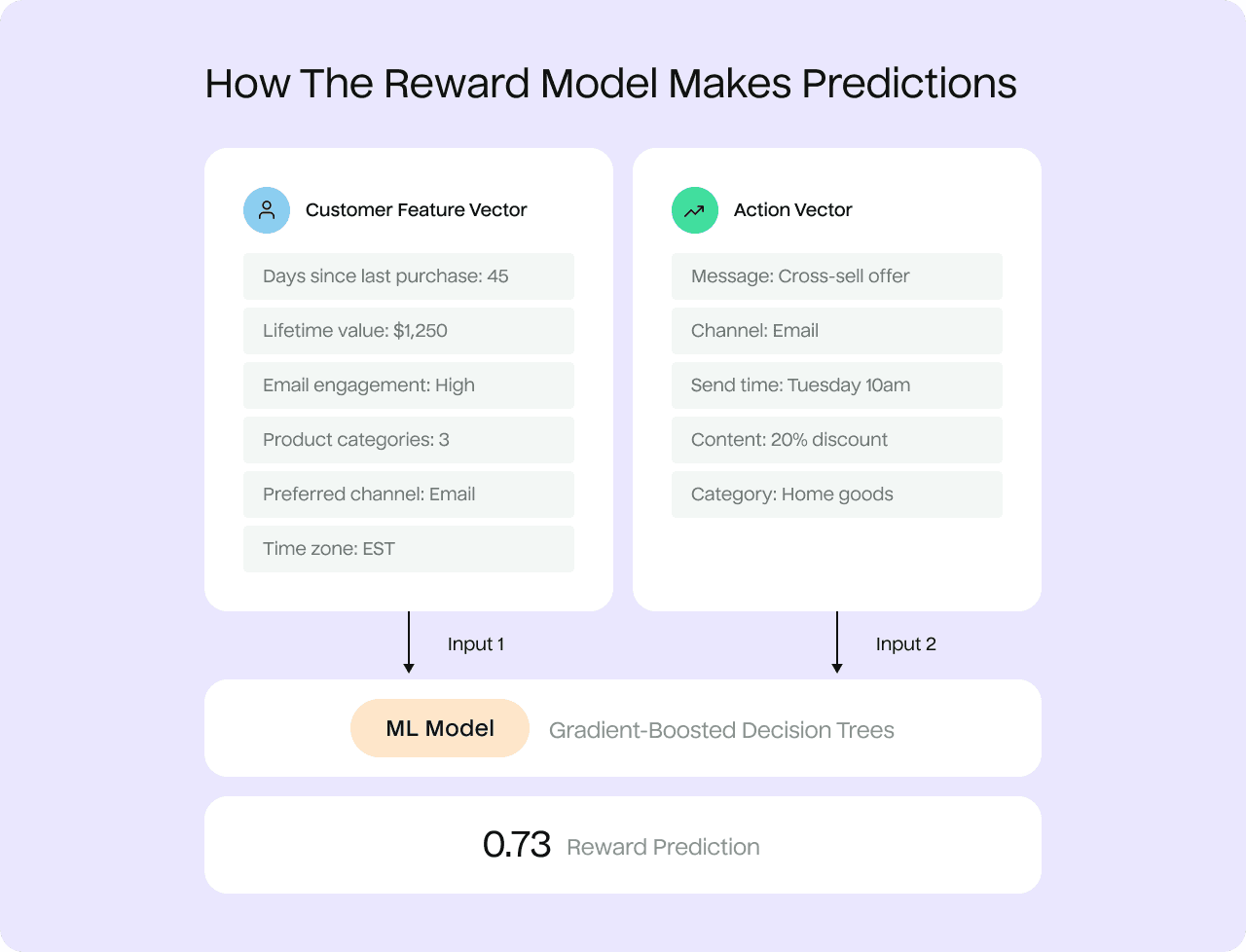

Here’s where machine learning happens. Contextual bandits use your customer feature matrix, possible actions, and a machine learning model to predict the expected reward for each option.

Let's walk through this process step by step:

- Customer context input: The contextual bandit takes a customer's data profile (e.g., days since last purchase: 45, lifetime value: $1,250, email engagement: high).

- Action options: It considers possible dimensions of an action and how to combine them: what message type to send (cross-sell offer), which channel to use (email), when to send it (Tuesday at 10 am), which offer to include (20% discount), and which product category to focus on (home goods).

- ML model processing: The AI model analyzes how this customer's profile interacts with each combination of action dimensions to predict the outcome. It uses gradient-boosted decision trees, a type of machine learning model that's particularly powerful for marketing because it excels at finding complex, multi-layered patterns like "customers who browse furniture after buying computer equipment respond best to home goods offers on weekend evenings."

- Reward prediction: The model outputs a prediction score (e.g., 0.73) indicating how likely the action (this specific combination of dimensions) is to drive the desired outcome for this specific customer.

- Decision-making: The contextual bandit selects the optimal combination across dimensions (e.g., content, send time, channel) with the highest predicted reward for that individual.

The model’s continuous learning—where every customer interaction feeds back into the system to refine future decisions—enables real 1:1 decision-making that improves over time.
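The steps above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the action dimensions are a small hypothetical subset, `discount_clicks` is an invented context field, and `predict_reward` is a hand-written stand-in with made-up weights where a production system would call a trained model such as the gradient-boosted trees mentioned above. The `epsilon` parameter shows one common way to leave room for exploration; it is not necessarily the strategy any given product uses.

```python
import itertools
import math
import random

# Hypothetical action dimensions, loosely following the examples in the text.
ACTION_DIMENSIONS = {
    "message": ["cross_sell", "exclusive_preview"],
    "channel": ["email", "sms"],
    "offer": ["none", "20_percent_off"],
}


def enumerate_actions(dimensions):
    """Every combination of one value per dimension, as a dict."""
    names = list(dimensions)
    return [
        dict(zip(names, combo))
        for combo in itertools.product(*dimensions.values())
    ]


def predict_reward(context, action):
    """Stand-in for a trained model: returns a score between 0 and 1.

    The weights below are made up for illustration only.
    """
    score = -1.0
    if action["channel"] == "email" and context["email_engagement"] == "high":
        score += 1.0
    if action["offer"] == "20_percent_off":
        score += 0.8 if context["discount_clicks"] > 0 else -0.5
    if action["message"] == "exclusive_preview" and context["lifetime_value"] > 1000:
        score += 0.7
    return 1.0 / (1.0 + math.exp(-score))  # squash to a probability-like score


def choose_action(context, actions, epsilon=0.0, rng=random):
    """Pick the highest-scoring action; explore at random with probability epsilon."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda action: predict_reward(context, action))
```

For a profile like the one in the first step (lifetime value of 1,250, high email engagement, and no history of clicking discounts), `choose_action` with the default `epsilon=0.0` picks the email channel, the exclusive preview message, and no discount.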

Individual decisions in practice

Let’s see how contextual bandits handle Sarah and Marcus, our two customers with significantly different preferences and behaviors:

For Sarah (premium customer):

- Context: High lifetime value, never opens discount emails, responds to "exclusive" messaging, engages on weekend mornings

- Decision: Send "Exclusive preview: New collection" on Saturday at 9 am

- Outcome: Sarah opens because it feels curated for her preferences

For Marcus (price-conscious shopper):

- Context: Moderate purchase frequency, high discount engagement, clicks promotional emails, active on Wednesday evenings

- Decision: Send "Limited time: 50% off your favorites" on Wednesday at 7 pm

- Outcome: Marcus clicks because the timing and offer match his patterns

The execution of the campaign is different for each customer, based on their context.

Continuous learning at two levels

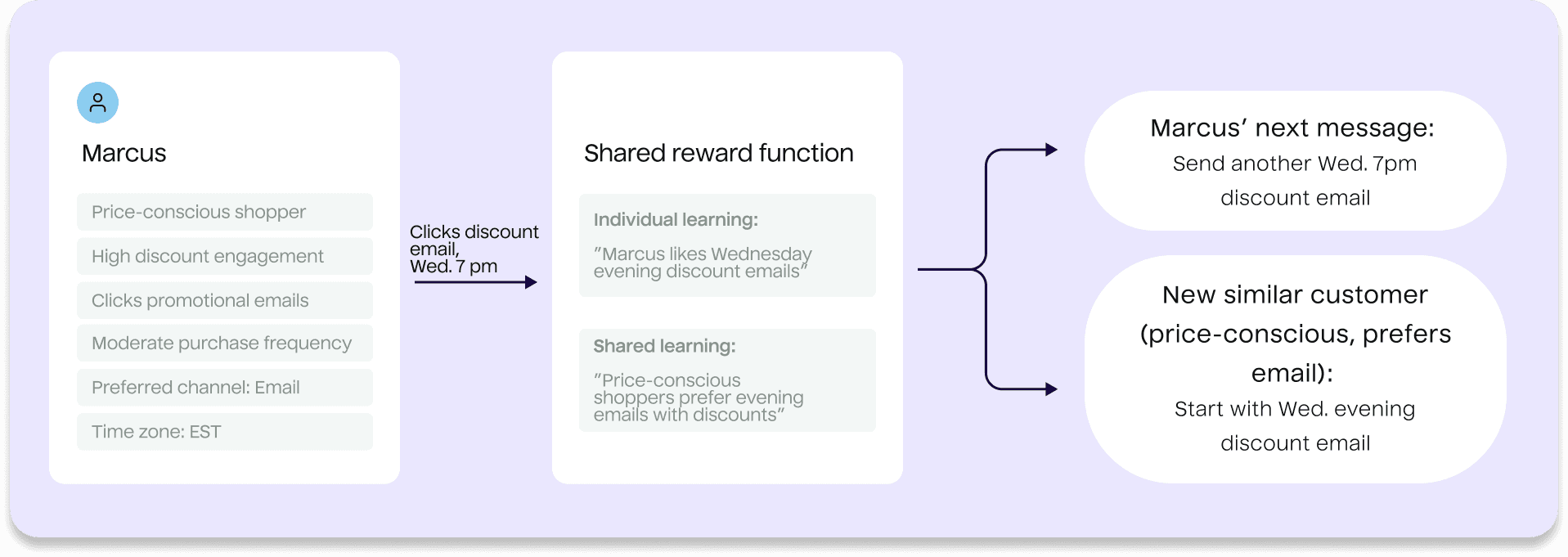

Like multi-armed bandits, contextual bandits learn from every interaction. They’re especially effective because they learn at both the individual and audience levels, through a shared reward function.

Audience-level learning: When Marcus clicks a discount email on Wednesday evening, the bandit doesn't just learn about Marcus. It also updates its understanding of how similar customers (price-conscious, moderate purchase frequency, evening engagement) respond to discount offers. These audience-level patterns inform decisions for customers with similar characteristics.

Individual-level learning: At the same time, the bandit builds a picture of Marcus as an individual, noting where his behavior differs from the broader audience.

Shared reward optimization: The contextual bandit uses a single reward function. This means discoveries about what drives outcomes get applied broadly while individual exceptions and preferences are preserved.

This dual learning lets the system make good decisions for new customers based on patterns across all customers (addressing the cold-start problem), while continuously refining its understanding of the individual’s preferences.
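One way to picture learning at two levels is a model with shared weights plus a small per-customer adjustment, both updated from the same reward signal. The sketch below is a toy online logistic model written for this explanation; the class name, the feature layout, and the learning rate are assumptions, and it is not the model described in this article.

```python
import math
from collections import defaultdict


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TwoLevelModel:
    """Toy click model: shared weights plus a per-customer offset."""

    def __init__(self, n_features, learning_rate=0.1):
        self.shared = [0.0] * n_features    # audience-level patterns
        self.personal = defaultdict(float)  # (customer_id, action) -> offset
        self.lr = learning_rate

    def predict(self, customer_id, action, features):
        """Predicted click probability for this customer and action."""
        z = sum(w * x for w, x in zip(self.shared, features))
        # Unknown customers have no offset, so they fall back to shared weights.
        z += self.personal.get((customer_id, action), 0.0)
        return sigmoid(z)

    def update(self, customer_id, action, features, reward):
        """One gradient step on log loss; a single reward moves both levels."""
        error = reward - self.predict(customer_id, action, features)
        for i, x in enumerate(features):
            self.shared[i] += self.lr * error * x
        self.personal[(customer_id, action)] += self.lr * error
```

If Marcus clicks the discount email repeatedly, each `update` call raises both the shared weights and his personal offset. A brand-new customer with the same features then starts above the 0.5 baseline thanks to the shared weights alone, while Marcus's own prediction sits higher still because of his offset.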

This technical advance in learning enables a shift in how marketing personalization works.

From approximation to precision

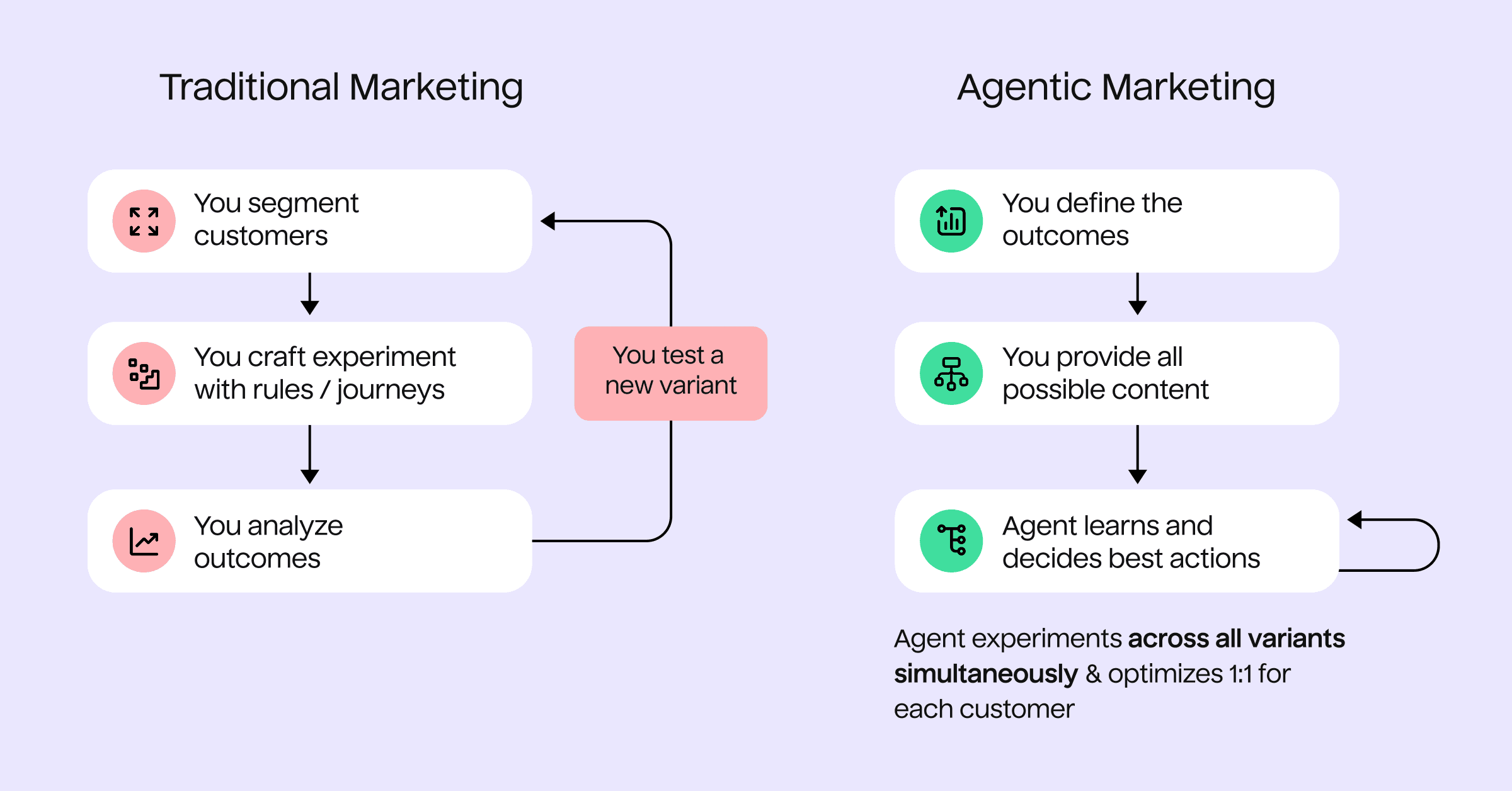

Contextual bandits represent a shift from segment-level lifecycle marketing, like journeys and batch emails, to precise personalization.

Traditional journeys and campaigns rely on ever-smaller segments to approximate individual preferences. Even granular segmentation, though, still makes assumptions about everyone within each group.

Contextual bandits remove the approximation by learning and responding to individual patterns.

Just as important, contextual bandits adapt automatically when customer behavior changes. Marketers don't need to rebuild segments or create new journey branches; the bandit system detects the change and adjusts its personalization accordingly.

This precision delivers key benefits:

- Higher conversion rates: Personalized messages aligned with individual preferences drive more conversions, such as purchases

- Better customer experience: Customers receive relevant, valuable communications instead of generic broadcasts

- Improved ROI: Marketing resources focus on customers and messages most likely to drive business outcomes

- Reduced unsubscribes: Relevant, well-timed messages respect customer preferences and attention

- Automatic optimization: No manual intervention required as customer behavior evolves

From theory to practice

While contextual bandits provide a foundation for individual personalization, implementing them at enterprise scale is challenging. Marketing teams need systems that can process hundreds of customer features and thousands of possible actions while integrating seamlessly with their existing data and tools. They need tools that coordinate multiple campaigns without overwhelming customers and methods for connecting personalization with existing ML models.

Hightouch built AI Decisioning to address these practical challenges. It is a production system that uses multiple contextual bandits optimized for different business outcomes and coordinates messages across campaigns. The result is a system that’s powering personalization for major enterprises, allowing marketers to focus on outcomes and creative approaches while AI agents optimize decision-making for every customer.

Marketing’s personalized future

We’re at the end of our journey through the technologies that drive AI Decisioning.

Part one explored the gap between the promise and reality of personalization. Customers want individual experiences, but segments and journeys can’t deliver them.

Part two introduced reinforcement learning as the foundation for AI agents that learn what works through automated experimentation.

Part three showed how multi-armed bandits balance scaling proven winners with testing new approaches, enabling more intelligent optimization than traditional A/B testing.

Part four has uncovered how contextual bandits combine the intelligent exploration-exploitation of multi-armed bandits with individual customer data to achieve real 1:1 personalization.

Together, these technologies form a marketing system that operates differently from traditional segment-based approaches. Instead of building granular segments and complex journeys, you configure and deploy AI agents through the Hightouch application. These agents then make decisions for individual customers automatically. With AI Decisioning, you don’t hope your marketing works for each individual; you know it’s optimized for each customer.

Contextual bandits deliver on personalization's promise not through increasingly complex rules and segments, but through AI agents that understand individual customer patterns and adapt to them continuously. Sarah gets her exclusive previews on Saturday mornings. Marcus gets his discount offers on Wednesday evenings. Every customer gets experiences that recognize who they are.

Marketing’s future is personalized, and it’s possible at enterprise scale now.

If you're interested in AI Decisioning, unlocking more value from your existing marketing channels, and increasing the velocity at which you can experiment and learn, book some time with our team today!