Early in 2024, leading AI experts like Peter Norvig and Andrew Ng predicted that 2024 would be the year of AI agents. From my LinkedIn feed, I can say confidently that they were right. “Agents” or “AI Agents” have become some of the most popular terms in technology.

While a lot of the discussion on AI agents has been centered around large language models (LLMs)—and LLM agents are extremely powerful—they aren’t the whole story. For many use cases like marketing decisioning, traditional ML technologies like reinforcement learning (RL) are a better fit than LLMs. And when RL and LLMs are used together, AI agents can be even more powerful.

We’ve done this at Hightouch with our AI Decisioning product. AI Decisioning uses RL at its core to continuously determine the best way to engage each customer across all channels (email, push, ads, in-app/on-site, etc.) while using generative AI to provide RL with more data about a company’s content and customers.

We’ll start by explaining what AI agents are and their value in marketing. Then, we’ll contrast LLM and RL-based agents, and explain why we built AI Decisioning to use RL at its core and LLMs for specific use cases at the edges.

What are AI agents?

AI agents are software systems that autonomously perform tasks using AI.

As we explored in a recent post, agents aren't a new idea in software. They've existed for decades, have an ISO definition, and even had a hype cycle in the 1990s. That said, agents are getting a lot more powerful with the rise of ML and generative AI.

There are three things that set modern AI agents apart.

- Modern AI Agents can reason through the ideal series of tasks or actions to achieve an end goal. This is often referred to as “planning.”

- Modern AI Agents consider previous interactions, events, and data when making future decisions. This is often referred to as “memory.”

- Modern AI agents can interact with other tools and systems. This is often referred to as “tool use.”

a. In our case, AI Decisioning actually takes action on customers—for example, triggering a particular email send to a customer with dynamic variables via a Customer Engagement Platform like Iterable, Braze, or Salesforce.
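To make these three properties concrete, here’s a toy Python sketch. Everything in it is hypothetical: `ToyAgent`, its hard-coded `plan` method (a stand-in for real reasoning), and the two lambda “tools” are illustrations, not any agent framework’s API and not how AI Decisioning is built.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyAgent:
    """Hypothetical toy agent illustrating planning, memory, and tool use."""
    tools: dict[str, Callable[[str], str]]
    memory: list[str] = field(default_factory=list)

    def plan(self, goal: str) -> list[tuple[str, str]]:
        # Planning: a hard-coded task breakdown standing in for real reasoning.
        return [("draft", goal), ("publish", goal)]

    def run(self, goal: str) -> list[str]:
        for tool_name, arg in self.plan(goal):
            # Memory: results of earlier steps are passed as context to later ones.
            context = " | ".join(self.memory)
            request = f"{arg} [{context}]" if context else arg
            # Tool use: the agent calls an external function to act.
            self.memory.append(self.tools[tool_name](request))
        return self.memory


agent = ToyAgent(tools={
    "draft": lambda s: f"draft({s})",
    "publish": lambda s: f"published({s})",
})
print(agent.run("promo"))  # ['draft(promo)', 'published(promo [draft(promo)])']
```

In a real LLM-based agent, `plan` and the content of each step would come from model calls; the skeleton only shows where each of the three capabilities sits.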

We’ll explore marketing personalization as a problem, and then use these three features to help understand how LLM and RL-based agents work.


How can AI agents disrupt marketing?

One of the biggest problems in marketing is personalization: how do I use all the data I have about customers to inform how I engage with each one?

Most marketing technology products (including CDPs) focus on empowering marketing teams with self-service UIs to build rules (“audiences” and “journeys”) to decide which customers should receive what. While this is a good start, there are two key problems:

- Audiences and journeys are generalizations. You’re making decisions based on rules or “bucketing customers” rather than truly leveraging everything you know about each customer to make the optimal decision.

- Even if you have all the data in the world and self-service access, it’s not always obvious what rules to create to decide how to engage each customer. There are so many variables and open questions that it’s not feasible for a marketing team to run the hundreds, thousands, or even millions of experiments needed to answer them. For example:

a. What content will resonate best with each customer?

b. How should I balance communications between different topics & goals?

c. How should I use behavior in my app, website, store, etc. to influence these decisions?

This is what ML/AI is really good at—optimizing data-driven decisions at a beyond-human scale. To solve this with an ML/AI solution, we need to break down the problem into the types of inputs and outputs that ML/AI solutions require.

The first step is providing your platform with data. ML is not magic. It’s only as good as the data it has. AI Decisioning can sit on top of the customer data in your CDP, business tools, and data warehouses. Customer data likely includes an email, a username, unique IDs, and some demographic information, and can include event and product data (e.g., website pages visited, items added to cart, workouts completed). It could also be data about similar customers, as well as data about other messages and their performance.
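Before a model can use this data, it usually has to be turned into numbers. The sketch below assumes a hypothetical fitness-app schema (`Customer`, its fields, and `to_features` are illustrative, not a real CDP model or Hightouch API) and builds a simple context vector. Identifiers like email or username are typically used to join data sources rather than as model features.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    # Hypothetical fields for a fitness app; real schemas come from your CDP or warehouse.
    customer_id: str          # used to join data, not as a model feature
    age: int
    plan: str                 # "monthly" or "annual"
    workouts_last_30d: int
    cart_adds_last_30d: int


PLANS = ["monthly", "annual"]


def to_features(c: Customer) -> list[float]:
    """Turn one customer record into a numeric context vector."""
    plan_one_hot = [1.0 if c.plan == p else 0.0 for p in PLANS]
    return [
        1.0,                           # bias term
        c.age / 100.0,                 # crude scaling toward [0, 1]
        c.workouts_last_30d / 30.0,    # roughly workouts per day
        c.cart_adds_last_30d / 10.0,
        *plan_one_hot,
    ]


print(to_features(Customer("u_123", age=40, plan="annual",
                           workouts_last_30d=6, cart_adds_last_30d=2)))
# [1.0, 0.4, 0.2, 0.2, 0.0, 1.0]
```

The scaling choices here are arbitrary; the point is that every decision the model makes is conditioned on a vector like this one.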

Next, for ML to optimize decisions, you need clear, measurable goals and outcomes to optimize for. These outcomes can range from something as simple as clickthrough rate, to down-funnel behavior like purchases, or, in the case of reinforcement learning, even long-term behavior like customer lifetime value (LTV) or a decreased likelihood of churn.
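One common way to make “measurable goals” usable by a model is to combine outcomes into a single scalar reward. This is a minimal sketch under assumed, hypothetical weights; choosing them is a business decision, and the function name and signature are illustrative only.

```python
def reward(clicked: bool, purchased: bool, workouts_next_7d: int,
           w_click: float = 0.1, w_purchase: float = 1.0,
           w_workout: float = 0.2) -> float:
    """Hypothetical scalar reward mixing a short-term signal (click),
    a down-funnel signal (purchase), and longer-term engagement (workouts)."""
    return (w_click * clicked
            + w_purchase * purchased
            + w_workout * workouts_next_7d)


print(reward(clicked=True, purchased=False, workouts_next_7d=2))
```

Outcomes like LTV or churn take much longer to observe than a click, so this sketch leaves them out.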

Finally, you need a set of actions that your ML/AI agents can take. In the case of marketing, this may be a library of emails, push notifications, web and mobile experiences, feature flags, offers, etc. For each action, there may be a range of variables. For example, emails can have various options for subject lines, content or tones, or even truly dynamic content like product recommendations or offer amounts. Each of these variables could be part of the output of a model deciding on an email message for a customer or user.
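A quick way to see why hand-built experiments don’t scale is to enumerate the action space. The variant lists below are hypothetical; even this tiny library for a single email template yields 54 distinct actions per customer, before adding channels or dynamic content like product recommendations.

```python
from itertools import product

# Hypothetical variant library for one win-back email template.
subject_lines = ["We miss you!", "Your next workout is ready", "20% off this week"]
greetings = ["Hi {first_name},", "Hey there,"]
offers = [None, "20% off", "free week"]
send_times = ["08:00", "12:00", "18:00"]

# Every combination is a distinct action the agent could choose.
actions = [
    {"subject": s, "greeting": g, "offer": o, "send_time": t}
    for s, g, o, t in product(subject_lines, greetings, offers, send_times)
]
print(len(actions))  # 54 = 3 * 2 * 3 * 3
```

Each new variable multiplies the count, which is why a model that learns which combinations work for whom is more practical than testing them one by one.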

Understanding these constraints on inputs, outputs, and outcomes enables us to consider how any given ML/AI system and model(s) could be implemented to solve specific problems in marketing personalization.

We’ll do just that, looking first at LLMs before turning to reinforcement learning.

How LLM-based agents work

Let's work through a task with an LLM-based agent to understand how they solve problems, with attention to planning, memory, and tool use. LLMs are generative, so we'll focus on a use case that leverages their generative capacities for personalizing marketing content.

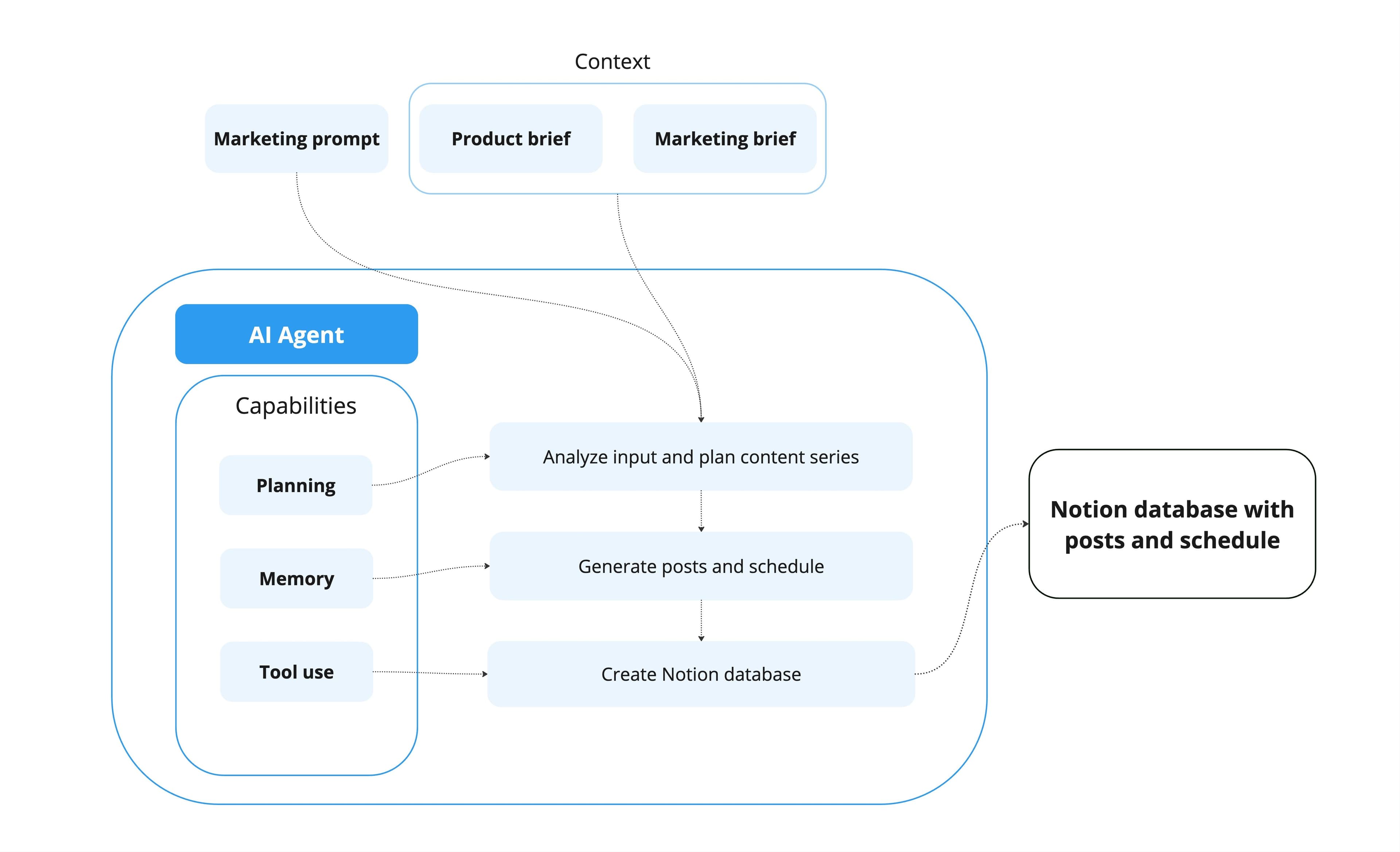

Suppose you have an AI agent built and fine-tuned for marketing, and you give it this prompt: "Create a series of LinkedIn posts to explain and promote the new product features in the provided product brief. Suggest when to post each post over a week, and create a Notion database with the messages for review." You provide a product brief and a marketing positioning and messaging brief with persona information. We’ll assume that the engineering team building the agent system has written a function to create databases in Notion as a tool, with secure access to the necessary API credentials.

At a high level, the marketing agent works in a few steps. It:

- Breaks down the problem and context and plans a series of tasks (planning).

- Generates and saves a sequence of content assets with suggested posting dates and times based on its knowledge and the provided product and marketing documents (memory).

- Uses the Notion tool function to create a new database with the generated social posts (tool use).
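The three steps above can be sketched as a small pipeline. This is illustrative only: `fake_llm_plan` and `fake_llm_write` are stubs standing in for LLM calls, and `create_notion_database` is a hypothetical tool function that builds a payload instead of calling the real Notion API.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Post:
    text: str
    publish_on: str  # ISO date suggested by the agent


def fake_llm_plan(prompt: str) -> list[str]:
    # Stand-in for an LLM call that breaks the prompt into tasks (planning).
    return ["announce feature A", "deep dive on feature A", "customer story"]


def fake_llm_write(task: str, brief: str) -> str:
    # Stand-in for an LLM call that drafts one post from a task and the brief.
    return f"[{task}] {brief}"


def create_notion_database(posts: list[Post]) -> dict:
    # Hypothetical tool function. A real version would call the Notion API with
    # stored credentials; this one only returns the payload it would send.
    return {"title": "LinkedIn posts for review",
            "rows": [{"Post": p.text, "Date": p.publish_on} for p in posts]}


def run_marketing_agent(prompt: str, brief: str, week_start: date) -> dict:
    tasks = fake_llm_plan(prompt)                      # 1. planning
    memory: list[Post] = []
    for i, task in enumerate(tasks):                   # 2. generate and save (memory)
        day = week_start + timedelta(days=2 * i)       # spread posts across the week
        memory.append(Post(fake_llm_write(task, brief), day.isoformat()))
    return create_notion_database(memory)              # 3. tool use


result = run_marketing_agent("Promote the new product features", "New dashboards",
                             week_start=date(2024, 6, 3))
print([row["Date"] for row in result["rows"]])  # ['2024-06-03', '2024-06-05', '2024-06-07']
```

Note that the last step is only as reliable as the tool function: if the external API changes, `create_notion_database` has to change with it.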

An LLM-based AI agent for marketing

The LLM is the engine of this workflow. It uses context and knowledge embedded through its training to break down a directive (a prompt) into tasks, generate content, save it for future use, and use tools to push this content into a team’s existing workflows. It's incredible that we have systems that can already do this.

However, this system has risks and failure points. The risk of hallucinations is well-known at this point. While agent frameworks increasingly have evaluation systems (often using other LLMs and agents), the LLM agent can still generate content that doesn't precisely follow messaging guidelines or misses your audience's nuance. This agent also depends on a supplied tool function for Notion, an additional maintenance cost for an engineering team. The workflow will fail if the Notion API has a breaking change that is not accounted for in an updated function.

There are ways to mitigate each concern, but the infrastructure effort and maintenance costs build quickly and can offset the impressive gains from an LLM-based agentic system.

There's another approach to building AI agents that learns directly from outcomes rather than relying on language models—reinforcement learning.

Building agents around reinforcement learning

We’ll consider an AI decisioning use case to understand how a reinforcement learning-based agent operates. Let's say your business runs a fitness app with a monthly subscription. Your marketing goal is to drive re-engagement for customers who have decreased use of the app through a mix of email and mobile push notifications. You're hoping to optimize your communications at an individual customer level.

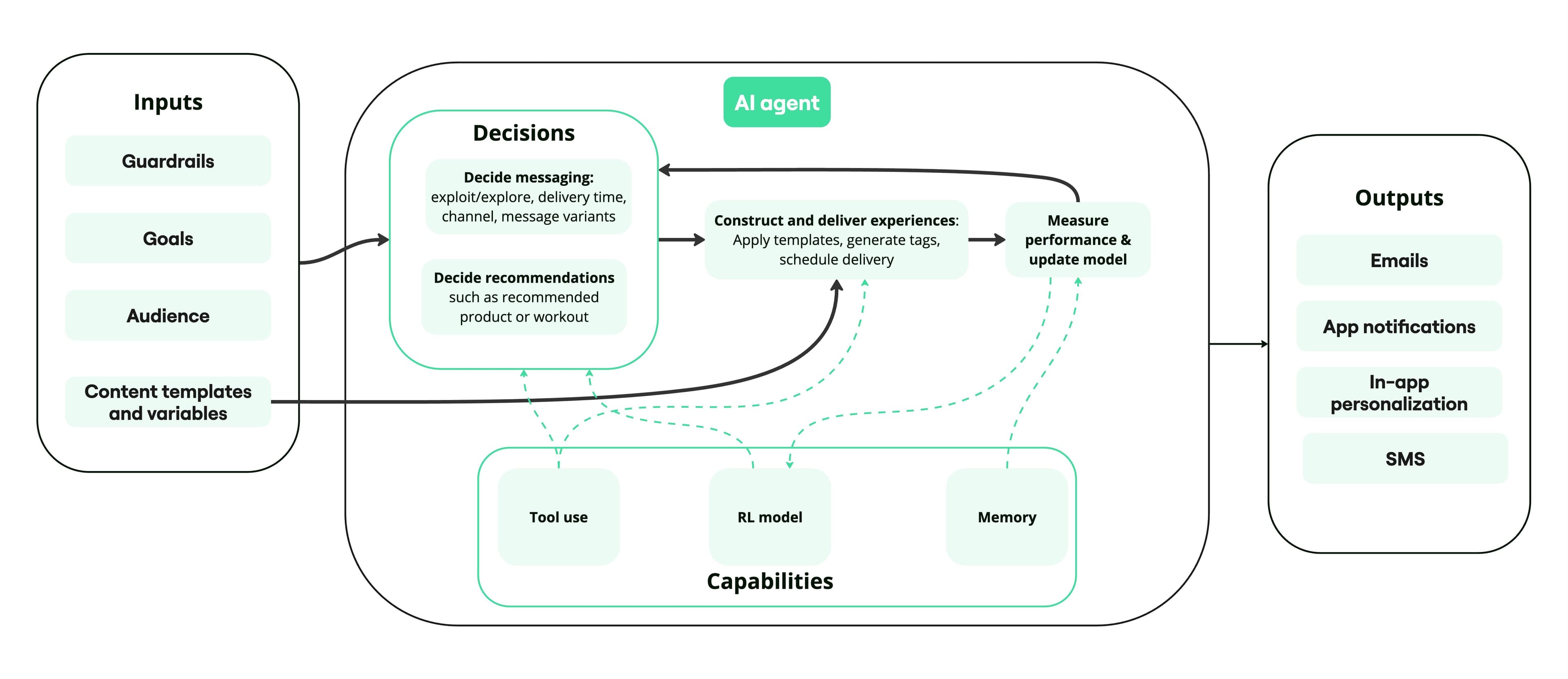

Within Hightouch's AI Decisioning product, you set up an agent with a win-back goal of twice-weekly workouts, targeting an audience of lapsing subscribers. AI Decisioning operates within the guardrails you create for it, including aspects like message frequency and the content of the messages. In your engagement platform (such as your ESP), you build templates for the messages you want the agent to use. In Hightouch, you also provide a set of variants (such as email subject lines, greetings, and offers) and a catalog of the workouts available through your app.
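Guardrails like these act as hard constraints applied before any learned decision. The sketch below is hypothetical (`AgentConfig`, its fields, and `allowed_to_send` are illustrations, not Hightouch’s configuration format) and shows a channel allowlist, quiet hours, and a rolling weekly frequency cap.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class AgentConfig:
    # Hypothetical setup mirroring the win-back agent; goal/audience are metadata here.
    goal: str = "2 workouts per week"
    audience: str = "lapsing_subscribers"
    max_messages_per_week: int = 3
    allowed_channels: tuple[str, ...] = ("email", "push")
    quiet_hours: tuple[int, int] = (22, 7)  # no sends from 22:00 until 07:00


def allowed_to_send(cfg: AgentConfig, channel: str, send_time: datetime,
                    recent_sends: list[datetime]) -> bool:
    """Guardrail check applied before the agent schedules any message."""
    if channel not in cfg.allowed_channels:
        return False
    start, end = cfg.quiet_hours
    # Quiet hours wrap past midnight, so block hours >= start OR < end.
    if send_time.hour >= start or send_time.hour < end:
        return False
    # Frequency cap over a rolling 7-day window.
    window_start = send_time - timedelta(days=7)
    sent_in_window = sum(1 for t in recent_sends if window_start < t <= send_time)
    return sent_in_window < cfg.max_messages_per_week


cfg = AgentConfig()
noon = datetime(2024, 6, 10, 12, 0)
print(allowed_to_send(cfg, "email", noon, []))  # True
print(allowed_to_send(cfg, "email", noon, [noon - timedelta(days=d) for d in (1, 2, 3)]))  # False
```

Keeping guardrails outside the learning model means the agent can explore freely without ever violating them.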

Here's what the agent does:

- In the first run, the agent decides on a delivery time, channel, and message for each customer in the audience. The message entails a decision for each variable field: subject line, greeting, and workout recommendation. The agent delegates the workout recommendation to another ML model built for product recommendations based on the customer's past workouts in the app and other customer data.

- The agent constructs messages using the template pulled from the engagement platform and schedules them for sending by integration or API (tool use). An LLM generates semantic tags for messages that enable the agent to learn across messages.

- For subsequent messages, the agent predicts rewards for potential actions and makes an exploit-explore decision. Depending on the RL model used, it either chooses a variant or message that has historically had positive outcomes (exploit) or experiments with a new variant (explore). With contextual multi-armed bandits, the agent uses customer data in the feature matrix to make these decisions, enabling true personalization: it sends a particular message at a specific time to each user based on that user's data, in the context of data from other users.

- The RL model updates based on the results of sent messages (memory).

- The agent repeats the cycle, making an exploit-explore decision for each user, constructing messages and schedules, sending messages, and then learning from the results.
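The predict, explore-or-exploit, send, and learn cycle above can be sketched with one of the simplest contextual bandits: epsilon-greedy selection over a per-arm linear reward model. The article doesn’t say which bandit algorithm AI Decisioning uses (LinUCB or Thompson sampling are common alternatives), and the click rates in the simulation are made up.

```python
import random


class EpsilonGreedyLinearBandit:
    """Contextual bandit: one linear reward model per arm, epsilon-greedy selection."""

    def __init__(self, n_arms: int, n_features: int, epsilon: float = 0.1,
                 lr: float = 0.02, seed: int = 0):
        self.weights = [[0.0] * n_features for _ in range(n_arms)]
        self.epsilon = epsilon
        self.lr = lr
        self.rng = random.Random(seed)

    def predict(self, arm: int, x: list[float]) -> float:
        # Predicted reward of choosing `arm` for a customer with context `x`.
        return sum(w * xi for w, xi in zip(self.weights[arm], x))

    def greedy(self, x: list[float]) -> int:
        scores = [self.predict(a, x) for a in range(len(self.weights))]
        return max(range(len(scores)), key=scores.__getitem__)

    def choose(self, x: list[float]) -> int:
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.weights))  # explore
        return self.greedy(x)                             # exploit

    def update(self, arm: int, x: list[float], reward: float) -> None:
        # Learn from the observed outcome: one SGD step on squared error, chosen arm only.
        error = reward - self.predict(arm, x)
        self.weights[arm] = [w + self.lr * error * xi for w, xi in zip(self.weights[arm], x)]


# Toy simulation with made-up click rates: arm 0 = morning send, arm 1 = evening send.
TRUE_CLICK_RATE = {("morning", 0): 0.6, ("morning", 1): 0.1,
                   ("evening", 0): 0.1, ("evening", 1): 0.6}
FEATURES = {"morning": [1.0, 0.0], "evening": [0.0, 1.0]}  # one-hot customer type

env = random.Random(42)
bandit = EpsilonGreedyLinearBandit(n_arms=2, n_features=2)
for _ in range(10_000):
    kind = env.choice(["morning", "evening"])
    arm = bandit.choose(FEATURES[kind])
    reward = 1.0 if env.random() < TRUE_CLICK_RATE[(kind, arm)] else 0.0
    bandit.update(arm, FEATURES[kind], reward)

print(bandit.greedy(FEATURES["morning"]), bandit.greedy(FEATURES["evening"]))
```

After enough rounds, the bandit should learn to pick the morning send for morning-type customers and the evening send for evening-type customers, while still exploring about 10% of the time.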

A reinforcement learning-based AI agent for marketing

The overall system uses several ML models, including multiple bandits. Together, they make decisions across content variables, channels, and timing, using customer data as the context to create personalized marketing experiences.
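One way to combine several bandits is to give each decision dimension (channel, send time, subject line) its own bandit and assemble a message from their choices. This is a simplified sketch under assumptions: it uses Beta-Bernoulli Thompson sampling with a coarse customer segment standing in for full customer context, and `ThompsonBandit` and `MessageDecider` are hypothetical names, not Hightouch’s architecture.

```python
import random
from collections import defaultdict


class ThompsonBandit:
    """Beta-Bernoulli Thompson sampling, with a separate posterior per customer segment."""

    def __init__(self, options, rng):
        self.options = list(options)
        self.rng = rng
        # Beta(1, 1) prior for every (segment, option) pair.
        self.alpha = defaultdict(lambda: 1.0)  # 1 + observed successes
        self.beta = defaultdict(lambda: 1.0)   # 1 + observed failures

    def choose(self, segment):
        # Sample a plausible success rate per option and pick the highest.
        draws = {o: self.rng.betavariate(self.alpha[(segment, o)], self.beta[(segment, o)])
                 for o in self.options}
        return max(draws, key=draws.get)

    def update(self, segment, option, success):
        if success:
            self.alpha[(segment, option)] += 1.0
        else:
            self.beta[(segment, option)] += 1.0


class MessageDecider:
    """One bandit per decision dimension; a message combines their choices."""

    def __init__(self, dimensions, seed=0):
        rng = random.Random(seed)
        self.bandits = {name: ThompsonBandit(opts, rng) for name, opts in dimensions.items()}

    def decide(self, segment):
        return {name: b.choose(segment) for name, b in self.bandits.items()}

    def learn(self, segment, decision, success):
        # Simplification: every dimension is credited with the same outcome,
        # which ignores interactions between, e.g., channel and subject line.
        for name, option in decision.items():
            self.bandits[name].update(segment, option, success)


decider = MessageDecider({
    "channel": ["email", "push"],
    "send_time": ["morning", "evening"],
    "subject": ["We miss you!", "Your next workout is ready"],
})
decision = decider.decide(segment="lapsing_weekday_users")
decider.learn("lapsing_weekday_users", decision, success=True)
print(decision)
```

Factoring decisions this way keeps each bandit small, at the cost of ignoring interactions between dimensions; a production system might model those jointly.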

Fitting the model to the problem: Differences between LLM and reinforcement learning-based agents

At least two crucial differences exist between how LLM-based agents and RL-based agents work, which indicate the different types of personalization problems they can solve.

LLM and RL systems handle planning and decision-making differently. LLMs are built to predict the next token in a sequence and have demonstrated emergent reasoning abilities. Given a problem and prompted to carry out a task, current LLMs can generate a series of tasks based on the knowledge embedded in the model and the provided context, then carry out those tasks, depending on their training and the functions available to them.

The RL model at the core of AI Decisioning isn't responsible for breaking down a problem and planning tasks. Instead, the system is built to carry out a constrained set of actions. It decides on messages, their timing, and their channel and optimizes those decisions according to a user-provided goal. Its decision-making is built on the foundation of the tight feedback loop at its core, enabling the model to adjust to customer behaviors while optimizing its decisions over time.

AI Decisioning's agents are also built to execute a single large task over a long duration, while the tasks typically given to LLM-based agents are shorter and more limited. Because the system uses a reinforcement learning model, it improves over time to achieve its goal and can—to a certain degree—be set and left to run.

LLMs don’t inherently learn from the outcomes of their actions, though. They can improve through better prompts, more or different contextual data, or fine-tuning on new data. To carry out longer tasks that require increasing accuracy or optimization based on new data, engineers must build feedback loops, context improvements, and repeated fine-tuning into the overall AI system in which the LLM is embedded. Though expensive, this engineering work is possible and may be compelling for use cases requiring flexible reasoning or the interactive interfaces common to LLM-based agentic systems.
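For contrast, here’s a minimal sketch of one kind of feedback loop engineers might build around an LLM: rank past messages by observed clickthrough rate and feed the best ones back into the prompt as examples. `build_prompt` and the `history` record shape are hypothetical. The model’s weights never change here; only its context does.

```python
def build_prompt(task: str, history: list[dict], k: int = 2) -> str:
    """Hypothetical: add the k best-performing past messages to the prompt."""
    def ctr(m: dict) -> float:
        # Guard against division by zero for messages that were never sent.
        return m["clicks"] / m["sends"] if m["sends"] else 0.0

    best = sorted(history, key=ctr, reverse=True)[:k]
    examples = "\n".join(f"- {m['text']}" for m in best)
    return f"{task}\n\nPast messages that performed well:\n{examples}"


history = [
    {"text": "New: guided recovery workouts", "clicks": 5, "sends": 100},
    {"text": "Your 10-minute core routine is ready", "clicks": 20, "sends": 100},
    {"text": "Draft that was never sent", "clicks": 0, "sends": 0},
]
print(build_prompt("Write a push notification for lapsing users.", history))
```

Every piece of this loop (logging outcomes, ranking, prompt assembly) is extra infrastructure that an RL model gets from its built-in update step.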

Building around RL models is the most compelling option for tasks where improvement over longer durations is needed and where the action space is constrained.

Decisioning agents for personalized marketing

The fast emergence of LLM-based agents has catalyzed conversations about AI systems that can act to achieve marketing goals. These agents excel at tasks that require reasoning and generative capacities, such as creating personalized content, creating derivatives from high-value content assets, and planning marketing campaigns. As we’ve explored, though, there are compelling reasons to look beyond LLMs when building agents for marketing personalization.

Reinforcement learning offers a different approach to agency built on continuous learning and optimization. While LLM-based agents adeptly handle complex, creative tasks, RL-based agents excel in scenarios where sustained optimization and adaptation to customer behaviors are vital. They’re well-suited to marketing personalization tasks with measurable outcomes, constrained action spaces, and the need for ongoing improvements.

The future of marketing automation lies in composite systems that can leverage both LLMs and RL, taking advantage of each where it shines. At Hightouch, we’re building AI Decisioning to deliver a truly personalized marketing experience through continuous experimentation and learning. When we talk about AI agents in marketing, it’s not just because it’s currently convenient. We’re describing systems that autonomously adapt to individual customer behaviors over time.

This is the vision for personalized marketing we're building: marketers should see each crafted experience and be surprised at how well it fits the customer; customers should feel like their experience is just for them.