As the number of data sources keeps growing, data integration isn’t getting any easier. In fact, it has only become more complex with the proliferation of cloud data warehouses. If you’re a data engineer, you know this pain all too well: managing ETL pipelines and batch processes can quickly turn into a nightmare.

This is exactly why Apache Airflow is probably the single most important tool in the data engineer’s toolbelt. In this article, you’ll learn how Airflow works, its benefits, and how to apply it to your data engineering use cases.

What is Airflow?

Airflow is an open-source platform for programmatically authoring, developing, scheduling, and monitoring batch-oriented workflows. Built on an extensible Python framework, it lets you build workflows that connect with virtually any technology. Data engineers use it to manage their data workflows from end to end.

Maxime Beauchemin created Airflow at Airbnb in October 2014 to help the company manage its complex workflows. The project is open source, has since become a top-level Apache Software Foundation project, and has a large community of active users.

How Does Airflow Work?

Apache Airflow can help you run virtually any data workflow. Because workflows are written in Python, they can execute bash commands and use external modules such as pandas.

Because of Airflow’s simplicity, you can use it for many kinds of work, including complex data pipelines, machine learning model training, data extraction, and data transformation.

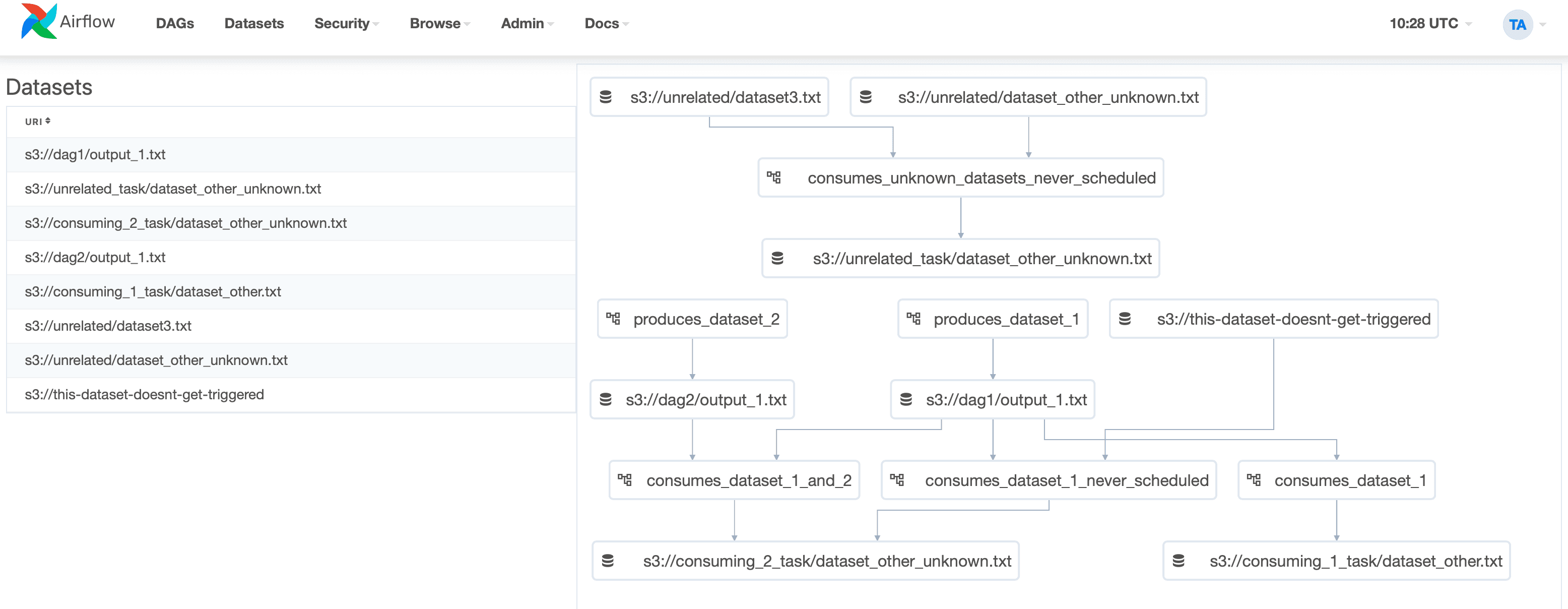

Airflow works as a framework that contains operators to connect with many technologies. Its core concept is the Directed Acyclic Graph, or DAG. A DAG needs a clear start and end, plus an interval at which it runs. Within a DAG are tasks. Tasks contain the actions to be performed, and a task may depend on other tasks completing before it executes.
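To make the idea of tasks and dependencies concrete, here is a minimal sketch in plain Python. It uses the standard-library graphlib module rather than Airflow’s own API, and the task names are made up for illustration. It shows how a set of "runs after" relationships resolves into a valid execution order, and why the graph has to be acyclic.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a task; its value is the set of tasks that must finish first.
# The task names are hypothetical.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}


def run_order(deps):
    """Return one valid execution order for the given dependency mapping."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
print(order)  # ['extract', 'transform', 'load', 'notify']

# A cycle has no valid order, which is why the graph must be acyclic.
try:
    run_order({"a": {"b"}, "b": {"a"}})
    cycle_rejected = False
except CycleError:
    cycle_rejected = True
```

Airflow does much more than this (scheduling, retries, a UI), but the ordering of tasks rests on the same principle.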

Source: https://airflow.apache.org/docs/apache-airflow/stable/ui.html

Airflow is the solution of choice if you prefer coding over clicking. Because workflows are defined in Python, you can keep them under version control, have multiple people work on them simultaneously, write tests to validate functionality, and extend existing components.

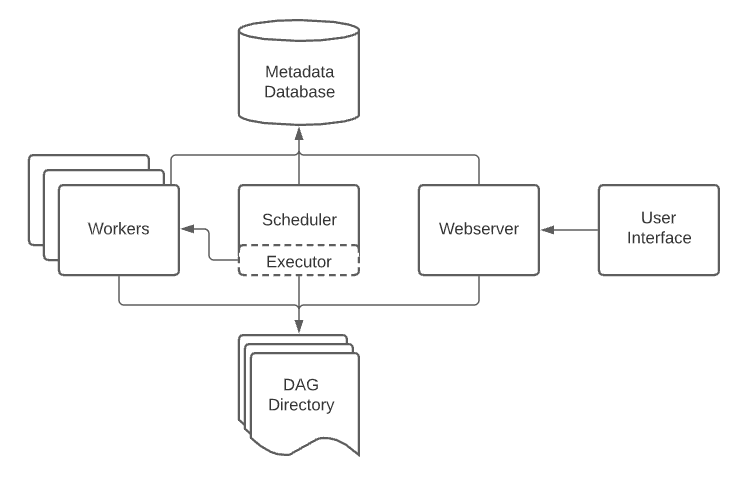

Airflow Architecture

To put all the Airflow components together, you use a DAG as the container for your workflows. DAGs are the most important component in Airflow, as they are the base for building your workflows.

Within a DAG, you have tasks containing the actions that need to be performed, and you can specify the dependencies between those tasks. The scheduler submits tasks to the executor, which runs them by pushing them to the workers.
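The hand-off from scheduler to executor to workers can be modeled with a short toy loop. This is a simplified sketch using only the Python standard library, not Airflow’s implementation: a thread pool stands in for the executor and its workers, and the function name run_dag and the task names are assumptions made for the example.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


def run_dag(deps, actions, max_workers=2):
    """Toy scheduler loop: submit each ready task to a pool of worker threads."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    finished = []
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        running = {}  # maps each future to its task name
        while sorter.is_active():
            # "Scheduler" step: pick tasks whose upstream tasks are all done.
            for task in sorter.get_ready():
                running[executor.submit(actions[task])] = task
            # Block until at least one worker finishes its task.
            done, _ = wait(list(running), return_when=FIRST_COMPLETED)
            for future in done:
                task = running.pop(future)
                future.result()  # re-raise any error from the task
                sorter.done(task)  # unlocks downstream tasks
                finished.append(task)
    return finished


# Hypothetical workflow: two independent extracts feed one combine step.
log = []
deps = {
    "extract_a": set(),
    "extract_b": set(),
    "combine": {"extract_a", "extract_b"},
}
actions = {name: (lambda n=name: log.append(n)) for name in deps}
finished = run_dag(deps, actions)
```

The two extract tasks may finish in either order, but combine is only submitted once both are done.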

Source: https://airflow.apache.org/docs/apache-airflow/stable/core-concepts/overview.html

Four characteristics underlie the power of what Airflow can help you achieve.

- Dynamic: Because workflows are configured in Python code, you can generate pipelines dynamically.

- Extensible: Because Airflow is written in Python, you can extend it as you see fit. You can create your own operators and executors and extend the library to match your requirements.

- Elegant: Airflow's pipelines are concise and clear, with built-in support for parameterizing scripts through the Jinja templating engine.

- Scalable: Airflow is built with a modular architecture and uses a message queue to communicate with and coordinate an arbitrary number of workers.
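As an illustration of the "dynamic" point, the sketch below generates a pipeline definition from a list instead of writing each task by hand. It is plain Python rather than Airflow code, and the function name build_pipeline and the table names are hypothetical. In Airflow you would apply the same loop when creating tasks inside a DAG file.

```python
def build_pipeline(tables):
    """Generate an extract -> load pair per table, plus one final report task."""
    deps = {}
    for table in tables:
        deps[f"extract_{table}"] = set()
        deps[f"load_{table}"] = {f"extract_{table}"}
    # The report runs only after every table has been loaded.
    deps["report"] = {f"load_{table}" for table in tables}
    return deps


pipeline = build_pipeline(["orders", "customers"])
for task, upstream in pipeline.items():
    print(task, "<-", sorted(upstream))
```

Adding a new table to the list adds its extract and load tasks automatically, with no other changes to the code.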

Airflow Components

Several components work together in every Airflow orchestration job. Here is a breakdown:

- DAGs: As mentioned above, a DAG is a container for a workflow. It contains the tasks you want to complete and lets you define the order in which they run and which tasks depend on others running first.

- DAG Runs: When a DAG is executed, a DAG run is created and all of its tasks are executed. You can schedule DAGs with a cron expression, a datetime.timedelta object, or one of the cron presets.

- Tasks: Tasks are what sit in a DAG. They contain the actions that need to be executed. Each task can have downstream and upstream dependencies, which is key to creating effective workflows. There are three types of tasks: Operators, Sensors, and TaskFlow-decorated tasks.

- Operators: An operator is a predefined template for a task. Operators are Python classes that encapsulate the logic for a unit of work. Some of the most frequently used are PythonOperator (executes a Python function), BashOperator (executes a bash command or script), and SnowflakeOperator (executes a query against a Snowflake database).

- Sensors: Sensors are a special type of operator that waits for a certain condition to be met. When the condition is met, the sensor is marked as successful and the downstream tasks are executed. Sensors can run in two modes. The default mode, ‘poke’, takes up a worker slot for the sensor’s entire runtime. The ‘reschedule’ mode takes up a worker slot only while it is checking and sleeps for a set interval between checks.

- TaskFlow: If you write most of your DAGs as plain Python code rather than with operators, you can use the TaskFlow API and its ‘@task’ decorator to reduce boilerplate. TaskFlow moves inputs and outputs between your tasks using XComs.

- Schedulers: A scheduler monitors all tasks and DAGs and triggers tasks once their dependencies are complete. At a set interval (one minute by default), the scheduler collects the results of parsing your DAGs and checks whether any active tasks can be triggered.

- Executor: Executors are the mechanism that runs tasks. They share a common API, so you can swap executors based on your installation needs. There are two types of executors: those that run tasks locally and those that run tasks remotely.

- XComs: XComs (short for "cross-communications") let tasks communicate with each other, since tasks are otherwise completely isolated and may run on different machines.

- Variables: Variables are a way of accessing information globally. They are stored as key-value pairs and can be queried from your tasks. You typically use them for overall configuration rather than for passing data between tasks or operators.

- Params: Params provide runtime configuration to tasks. When you trigger a DAG manually, you can modify its Params before the DAG run starts. If the supplied values don’t pass validation, Airflow displays a warning message instead of creating the DAG run.
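Of the components above, sensors are perhaps the easiest to picture in code. The following is a rough plain-Python model of the ‘poke’ behavior: check a condition, sleep, and check again until it succeeds or a timeout expires. It is not Airflow’s sensor class. The function name poke_until, its parameters, and the sample condition are all assumptions made for illustration.

```python
import time


def poke_until(condition, poke_interval=0.01, timeout=1.0):
    """Call condition() until it returns True; return how many checks it took."""
    deadline = time.monotonic() + timeout
    attempts = 0
    while True:
        attempts += 1
        if condition():
            return attempts
        # Give up if the next check would land past the deadline.
        if time.monotonic() + poke_interval > deadline:
            raise TimeoutError(f"condition not met after {attempts} checks")
        time.sleep(poke_interval)


# Hypothetical condition: succeeds on the third check, like a late-arriving file.
checks = iter([False, False, True])
attempts = poke_until(lambda: next(checks))
print(attempts)  # 3
```

Note that this loop holds on to its thread the whole time it waits, which mirrors the trade-off of ‘poke’ mode. The ‘reschedule’ mode avoids that by giving up the worker slot between checks, something this sketch does not model.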

Airflow Benefits

Airflow can offer many benefits to help you manage your complex data pipeline workflows.

- Ease of use: The learning curve for Airflow is gentle. Once you understand the different components and are familiar with Python, you can get started quickly.

- Open-source: Because Airflow is open source, it costs less to adopt, anyone can contribute improvements, and there is a community to offer support.

- Graphical user interface: To make managing your workflows easier, Airflow has a graphical UI where you can view the workflow you’ve created and see the status of ongoing and completed tasks.

- Integrations: Airflow comes with many pre-built operators that let you integrate with cloud platforms such as Google, AWS, and Snowflake, as well as popular databases.

- Automation: Airflow helps you automate your workflows. Once they are set up, they can run on a set schedule without manual intervention.

- Centralized workflow/orchestration management: Airflow offers a single place to set up all your workflows and provides a rich user interface that makes it easier to see and orchestrate your data pipelines and to handle complex relationships between tasks.

Airflow Use Cases

Airflow’s flexibility means you can use it for many things. Here are some of the most common use cases:

- Data Integration (ETL/ELT): You can use Airflow for data integration. Set up a DAG for each of your data pipelines that connects to a data source, such as your CRM, your social media accounts, or your ad platforms, and create tasks that retrieve the required data and transfer it to a data warehouse.

- Workflow automation across systems (orchestration): Because of the multiple operators that Airflow gives you access to, you can connect across multiple systems and automate workflows by setting up relevant DAGs.

- Scheduling: You need to run data pipelines at specific intervals. Rather than manually running them yourself daily or weekly, Airflow allows you to set your DAGs to be executed at a certain interval, automatically completing tasks.

- Alerting: Because you can specify dependencies of the tasks in your DAGs, you can build in control flow. If a particular task fails, you can set alerts to notify you via email, so you can investigate what may have caused the failure.
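The alerting pattern in the last item can be sketched in a few lines of plain Python. This is not Airflow’s notification mechanism, which has its own settings for failure alerts. The wrapper name run_with_alert, the task, and the error message are hypothetical, and a list stands in for an email sender.

```python
def run_with_alert(task_name, action, notify):
    """Run action(); on failure, send an alert and re-raise the error."""
    try:
        return action()
    except Exception as exc:  # broad on purpose: any failure should alert
        notify(f"Task {task_name!r} failed: {exc}")
        raise


alerts = []  # stand-in for an email or chat notification channel


def failing_load():
    # Hypothetical task that cannot reach its destination.
    raise ValueError("warehouse unreachable")


try:
    run_with_alert("load_orders", failing_load, alerts.append)
except ValueError:
    pass  # the failure still propagates after the alert is sent

print(alerts)  # ["Task 'load_orders' failed: warehouse unreachable"]
```

Re-raising after the alert matters: the task is still recorded as failed, so anything downstream that depends on it does not run on bad data.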

Final Thoughts

Apache Airflow has been around for a long time, but it’s still one of the most prominent and important technologies in the data engineering world because of the unique challenges it addresses. Airflow creates a centralized place where you can easily manage, orchestrate, and schedule all of your ETL jobs and batch processes.

Airflow is the most widely adopted open-source tool for data orchestration. Data integration is extremely complex, and the ability to define pipelines as DAGs in code and then view them visually is extremely useful. For data engineering orchestration, Airflow is essentially in a league of its own.